Apache Spark is a distributed computing engine designed for big data processing. To understand how it works internally, let’s walk through an example.

Scenario

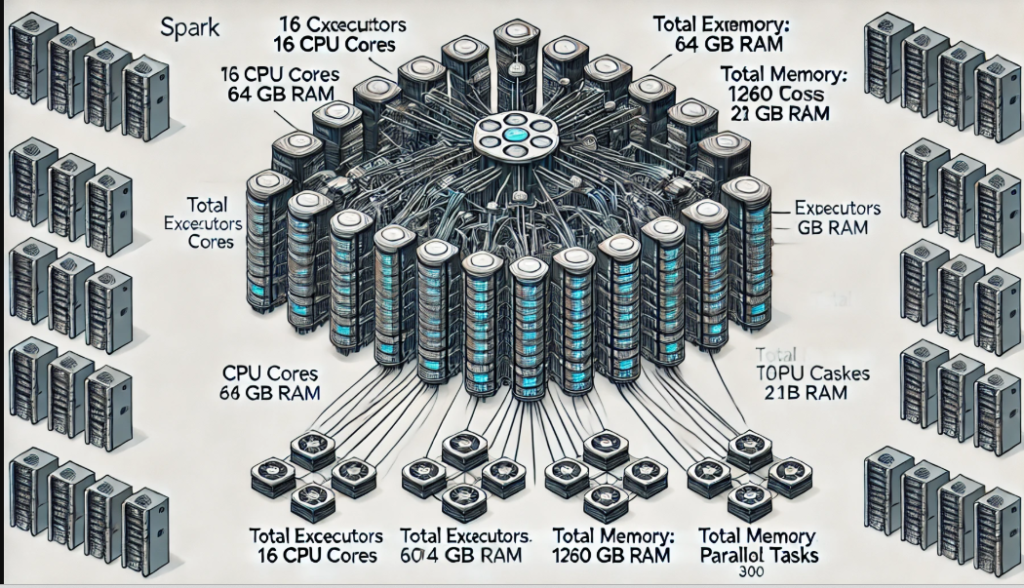

Imagine a Spark cluster with 20 nodes. Each node has the following resources:

- 16 CPU cores

- 64 GB RAM

Each node runs 3 executors (which leaves 1 core and 1 GB of RAM per node for the operating system and other daemons), and each executor has:

- 5 CPU cores

- 21 GB RAM

1. Total Cluster Capacity

To calculate the total capacity of this cluster:

- Total executors = 20 nodes × 3 executors = 60 executors

- Total CPU capacity = 60 executors × 5 CPU cores = 300 executor cores

- Total memory capacity = 60 executors × 21 GB = 1260 GB RAM

This cluster can run up to 300 tasks in parallel, since each task occupies one executor core by default and 300 executor cores are available.
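The capacity arithmetic above can be sketched in a few lines of plain Python. The function name `cluster_capacity` and its parameters are illustrative and not part of any Spark API; the numbers come from the scenario.

```python
# Figures taken from the scenario above.
NODES = 20
EXECUTORS_PER_NODE = 3
CORES_PER_EXECUTOR = 5
MEMORY_PER_EXECUTOR_GB = 21


def cluster_capacity(nodes, executors_per_node, cores_per_executor, memory_per_executor_gb):
    """Return (executors, executor cores, executor memory in GB) for the cluster."""
    executors = nodes * executors_per_node
    cores = executors * cores_per_executor
    memory_gb = executors * memory_per_executor_gb
    return executors, cores, memory_gb


executors, cores, memory_gb = cluster_capacity(
    NODES, EXECUTORS_PER_NODE, CORES_PER_EXECUTOR, MEMORY_PER_EXECUTOR_GB
)
print(f"{executors} executors, {cores} cores, {memory_gb} GB RAM")
```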

2. Parallel Tasks with Specific Executor Requests

If you request 4 executors for a job, each with 5 CPU cores, the job will utilize:

- Total cores = 4 executors × 5 cores = 20 cores

- Parallel tasks = 20, because each task occupies one core by default
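As a rough sketch, the request could be expressed with the standard configuration keys `spark.executor.instances`, `spark.executor.cores`, and `spark.executor.memory`. The snippet below only models the arithmetic in plain Python and does not start a Spark session. The helper `parallel_slots` is hypothetical, and it assumes static allocation (dynamic allocation turned off) and the default `spark.task.cpus` of 1.

```python
# Settings one might pass via spark-submit --conf or SparkSession.builder.config().
requested_conf = {
    "spark.executor.instances": "4",
    "spark.executor.cores": "5",
    "spark.executor.memory": "21g",
}


def parallel_slots(conf, task_cpus=1):
    """Number of tasks the job can run at once (hypothetical helper).

    task_cpus mirrors spark.task.cpus, which defaults to 1.
    """
    instances = int(conf["spark.executor.instances"])
    cores = int(conf["spark.executor.cores"])
    # Each executor can host cores // task_cpus concurrent tasks.
    return instances * (cores // task_cpus)


print(parallel_slots(requested_conf))
```

If a task were configured to need 2 cores, each 5-core executor could host only 2 tasks at a time, so the same request would yield 8 slots instead of 20.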

Example Job: Processing a 10.1 GB CSV File

Suppose you read a CSV file of 10.1 GB stored in a data lake and need to filter some data. Spark will:

- Split the file into 81 partitions of up to 128 MB each (10.1 GB = 10,342.4 MB, and 10,342.4 / 128 = 80.8, rounded up to 81). The last partition holds the remaining 102 MB or so.

- Total tasks = Number of partitions = 81 tasks.

Given 20 CPU cores, Spark executes:

- 20 tasks in parallel during each cycle.

- After completing the first 20 tasks, it processes the next 20, and so on.

This process requires 5 cycles: 4 cycles of 20 tasks each, plus 1 final cycle that runs the single remaining task while the other 19 cores sit idle.
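The partition and cycle counts can be reproduced with two ceiling divisions. This is a simplified model: it assumes a fixed 128 MB split size (the default of `spark.sql.files.maxPartitionBytes`) and ignores the other factors Spark weighs when planning file splits. `plan_waves` is an illustrative name, not a Spark function.

```python
import math

FILE_SIZE_MB = 10.1 * 1024   # 10.1 GB expressed in MB
PARTITION_MB = 128           # assumed split size
SLOTS = 20                   # 4 executors x 5 cores


def plan_waves(file_size_mb, partition_mb, slots):
    """Return (partitions, cycles, tasks in the last cycle)."""
    partitions = math.ceil(file_size_mb / partition_mb)
    cycles = math.ceil(partitions / slots)
    last_cycle_tasks = partitions - (cycles - 1) * slots
    return partitions, cycles, last_cycle_tasks


partitions, cycles, last_cycle_tasks = plan_waves(FILE_SIZE_MB, PARTITION_MB, SLOTS)
print(partitions, cycles, last_cycle_tasks)
```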

Task Execution Time

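If each task takes 10 seconds to process 128 MB, each of the first 4 cycles takes 10 seconds. The last cycle handles only the final partition of about 102 MB, which takes roughly 8 seconds at the same rate. The total time for 5 cycles is:

- 10 sec + 10 sec + 10 sec + 10 sec + 8 sec = 48 seconds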
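The estimate can be modeled as follows, under the simplifying assumptions that processing time scales linearly with partition size, that each cycle lasts as long as its largest partition, and that scheduling overhead is zero. Real jobs rarely behave this neatly, and `estimate_runtime_sec` is a hypothetical helper.

```python
def estimate_runtime_sec(file_size_mb, partition_mb, slots, sec_per_full_partition):
    """Estimate total runtime when tasks run in fixed-size cycles."""
    full, remainder_mb = divmod(file_size_mb, partition_mb)
    # All partitions are full-size except possibly the last one.
    sizes = [partition_mb] * int(full)
    if remainder_mb > 0:
        sizes.append(remainder_mb)

    total = 0.0
    for start in range(0, len(sizes), slots):
        cycle = sizes[start:start + slots]
        # A cycle finishes when its largest partition is done.
        total += max(cycle) / partition_mb * sec_per_full_partition
    return total


runtime = estimate_runtime_sec(10.1 * 1024, 128, 20, 10)
print(f"about {runtime:.0f} seconds")
```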

Memory Management and Error Possibility

Each executor’s 21 GB of RAM is divided as follows:

- Reserved memory: 300 MB (a fixed amount set aside for Spark’s internal objects)

- User memory: 40% of the remainder (stores user-defined variables/data, e.g., HashMap)

- Spark memory: 60% of the remainder (divided 50:50 between storage and execution memory)

For execution memory:

- 60% × 50% = 30% of the memory left after the 300 MB reservation = 0.30 × 20.7 GB ≈ 6.2 GB

- Per CPU core = 6.2 GB / 5 cores ≈ 1.2 GB

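Since each task reads a 128 MB partition, its input fits comfortably within the roughly 1.2 GB of execution memory available per core, so a simple filter like this one is unlikely to hit out-of-memory errors. Data can take more space in memory than on disk, so the margin matters for heavier operations such as joins or aggregations.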
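The memory split can be sketched as below. It assumes the default values of `spark.memory.fraction` (0.6) and `spark.memory.storageFraction` (0.5), and treats the full 21 GB as executor heap. `memory_breakdown_mb` is an illustrative helper rather than a Spark API.

```python
RESERVED_MB = 300        # fixed reservation for Spark internals
MEMORY_FRACTION = 0.6    # default spark.memory.fraction
STORAGE_FRACTION = 0.5   # default spark.memory.storageFraction


def memory_breakdown_mb(heap_mb, cores):
    """Split an executor heap into user, storage, and execution memory (MB)."""
    usable = heap_mb - RESERVED_MB
    spark_mem = usable * MEMORY_FRACTION
    user_mem = usable - spark_mem
    storage = spark_mem * STORAGE_FRACTION
    execution = spark_mem - storage
    return {
        "user_mb": user_mem,
        "storage_mb": storage,
        "execution_mb": execution,
        "execution_per_core_mb": execution / cores,
    }


breakdown = memory_breakdown_mb(21 * 1024, cores=5)
for name, value in breakdown.items():
    print(f"{name}: {value:.0f}")
```

The 50:50 boundary between storage and execution is soft in Spark's unified memory model: execution can borrow unused storage memory, so the per-core figure is a conservative estimate.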