This 2025 data engineering roadmap is a detailed guide for data engineers who want to master cloud technologies and key skills. It covers fundamentals such as programming, SQL, and data structures, with a focus on how they are applied in 2025.

This roadmap prepares you to build scalable pipelines and optimize data warehouses. It also teaches how to use AWS and Azure tools well.

It’s for all levels, from beginners to experts. It covers foundational knowledge and advanced topics like distributed computing and real-time processing. It aligns with current trends, helping you face today’s data challenges.

It also shows how to grow your career, from entry-level to data architect. This roadmap is your key to success in data engineering.

Key Takeaways

- Focuses on cloud platforms (AWS, Azure) and core data engineering fundamentals for 2025.

- Includes step-by-step guidance on mastering Python, SQL, and distributed systems.

- Highlights real-time tools like Apache Kafka and data lake architectures for modern pipelines.

- Outlines career progression paths for data engineers aiming for senior roles or specialized fields.

- Emphasizes hands-on projects and performance tuning to meet 2025 industry standards.

Why Data Engineering Is Critical in Today’s Tech Landscape

Data engineering is at the core of today’s tech advancements. Businesses need big data to make smart choices. This makes data engineers, who create scalable systems, essential. By 2025, spending on data analytics is expected to reach $274 billion, increasing the need for these experts.

The Evolution of Data Engineering

At first, data systems used relational databases. Now, they handle unstructured big data from many sources. Key developments include:

| Year | Key Development |
|---|---|
| 2010 | Rise of Hadoop for distributed storage |
| 2015 | Mainstream adoption of Apache Spark for in-memory distributed processing |
| 2023 | Adoption of MLOps and real-time pipelines |

The Growing Demand for Data Engineers in 2025

“Data engineering roles grew 35% annually since 2020, outpacing data scientist positions by 15%.” – LinkedIn 2024 Workforce Report

- Projected 12% annual job growth through 2025 (U.S. Bureau of Labor Statistics)

- Average salary for data engineers: $120k-$160k in 2025

- 85% of Fortune 500 companies now prioritize big data engineering teams

How Data Engineering Differs from Data Science

| Aspect | Data Engineering | Data Science |
|---|---|---|
| Main Focus | Infrastructure for data pipelines | Model development and insights |
| Key Tools | Airflow, Kafka, AWS Glue | Python, R, Tableau |
| Primary Goal | Reliable big data pipelines | Extracting actionable insights |

Data analysts interpret data, while engineers build the systems for analysis. This teamwork is key to data analytics success today.

The Complete Data Engineering Roadmap 2025

Our 2025 data engineering roadmap is a step-by-step guide to mastering the field. It covers the basics and the latest technology, matched to what employers want. Here’s what it includes:

- Phase 1: Prerequisites – Begin with programming, SQL, and data structures. These are the basics for more complex topics.

- Phase 2: Core Concepts – Learn about big data technologies like Hadoop, Spark, and stream processing.

- Phase 3: Cloud Proficiency – Get good at cloud computing on platforms like AWS and Azure. This is for building scalable data pipelines.

- Phase 4: Specialization – Pick a focus like real-time analytics or data governance, based on current trends.

Each phase includes practical projects and certifications that demonstrate your progress. The roadmap draws on data engineering courses and real-world examples, and it is flexible: you can focus on cloud computing or review the basics as needed.

By 2025, employers will look for engineers who know big data technologies and cloud computing. This roadmap helps you bridge the gap between learning and doing. Start now to meet the industry’s needs.

Essential Prerequisites Before Starting Your Data Engineering Journey

Building a strong foundation is key to mastering data engineering. Before diving into advanced topics, focus on core skills that form the backbone of this field. Whether you’re new to tech or transitioning from another discipline, these prerequisites ensure you’re ready to tackle real-world challenges.

Programming Fundamentals with Python

A solid grasp of Python fundamentals is non-negotiable. Learn to work with data types, loops, functions, and error handling. Libraries like Pandas and NumPy are vital for data manipulation. Practice reading and writing files and automating tasks, since these skills apply directly to ETL pipelines and data cleaning.

- Data types and control structures

- File I/O operations for batch processing

- Pandas for structured data wrangling
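As a minimal sketch of the file I/O, error handling, and cleaning skills listed above, the following uses only the standard library's `csv` module (a Pandas version would follow the same steps). The column names and sample rows are invented for illustration.

```python
import csv
import io

# Hypothetical raw input; in practice this would come from open("orders.csv").
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,not_a_number
3,carol,7
"""

def clean_rows(text):
    """Parse CSV text, normalize names, and set aside rows with invalid amounts."""
    cleaned, rejected = [], []
    for row in csv.DictReader(io.StringIO(text)):
        try:
            amount = float(row["amount"])
        except ValueError:
            rejected.append(row)  # keep bad rows for later inspection
            continue
        cleaned.append({
            "order_id": int(row["order_id"]),
            "customer": row["customer"].strip().lower(),
            "amount": amount,
        })
    return cleaned, rejected

cleaned, rejected = clean_rows(RAW_CSV)
print(cleaned)
print(len(rejected), "row(s) rejected")
```

Keeping rejected rows instead of silently dropping them makes it easier to trace data quality problems back to their source.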

SQL Mastery: Beyond the Basics

Once you know basic SELECT statements, move on to advanced querying. Master JOINs to combine tables in relational databases. Use Common Table Expressions (CTEs) to break complex analytics into readable steps, and window functions like ROW_NUMBER() to rank rows within groups. These skills carry over directly to relational databases like PostgreSQL or MySQL and are critical for schema design and query performance.

“Understanding window functions can reduce 80% of the need for complex application-layer logic.” – AWS Data Engineering Best Practices
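To make the JOIN, CTE, and ROW_NUMBER() ideas concrete, here is a small sketch that runs against an in-memory SQLite database through Python's `sqlite3` module. The tables and values are made up, and it assumes the bundled SQLite is version 3.25 or newer, which is when window functions were added. The same query shape should carry over to PostgreSQL or MySQL 8.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'alice'), (2, 'bob');
    INSERT INTO orders VALUES
        (1, 1, 50.0), (2, 1, 80.0), (3, 2, 20.0), (4, 2, 35.0);
""")

QUERY = """
WITH ranked AS (                        -- CTE: name an intermediate result
    SELECT c.name,
           o.amount,
           ROW_NUMBER() OVER (          -- window function: rank within customer
               PARTITION BY c.id ORDER BY o.amount DESC
           ) AS rn
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
)
SELECT name, amount FROM ranked WHERE rn = 1 ORDER BY name;
"""

# Largest order per customer.
top_orders = conn.execute(QUERY).fetchall()
print(top_orders)
```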

Understanding Data Structures for Engineering Success

Data structure knowledge bridges theory and practice. Arrays and hash tables enable efficient storage solutions. Trees and graphs model dependencies in distributed systems. For example, a hash table’s average-case O(1) lookup is what makes hash joins fast: one dataset is indexed by key, and each row of the other is matched with a single lookup. Use this knowledge to design scalable architectures.

| Data Structure | Use Case |
|---|---|
| Hash Tables | Fast key-value lookups in caching layers |
| Linked Lists | Dynamic memory management in streaming apps |
| Trees | Hierarchical data storage in NoSQL systems |
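The hash table lookup mentioned above can be sketched as a simple in-memory hash join. This is an illustrative toy with invented records, not how any particular engine implements joins.

```python
def hash_join(left, right, key):
    """Inner-join two lists of dicts on `key` using a hash table built from `right`."""
    # Build phase: index the right side by key (one pass over `right`).
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    # Probe phase: each lookup is O(1) on average, so the work is roughly
    # proportional to len(left) + len(right) plus the number of matches,
    # rather than len(left) * len(right) for a nested-loop join.
    joined = []
    for row in left:
        for match in index.get(row[key], []):
            joined.append({**match, **row})
    return joined

events = [
    {"user_id": 1, "action": "click"},
    {"user_id": 2, "action": "view"},
    {"user_id": 3, "action": "click"},  # no matching user, dropped by the join
]
users = [{"user_id": 1, "country": "DE"}, {"user_id": 2, "country": "US"}]

result = hash_join(events, users, "user_id")
print(result)
```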

Combine these skills with hands-on projects like building ETL workflows or optimizing database queries. Start small—replicate a relational database schema or write a script that merges datasets using Pandas. Every step builds the technical muscle memory needed for professional data engineering.

Core Data Engineering Foundations You Must Master

Mastering key concepts is vital for creating dependable systems. Focus on database management systems, data governance, and data quality. These areas help manage data efficiently and smoothly across different systems.

Begin with distributed computing basics. HDFS stores large datasets across many machines and replicates blocks so that a single node failure does not lose data. Apache Spark processes that data in parallel, which keeps large jobs fast and fault tolerant.

- Data lake implementation needs organized storage with clear rules. This keeps data quality high during all stages.

- Ingestion tools like Apache NiFi and Kafka make data flow smoothly. Cloud services like AWS Kinesis manage data for various tasks.

- Data warehouse design uses database systems for better ETL/ELT processes. Dimensional modeling boosts data quality and analysis.
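Dimensional modeling is easier to picture with a tiny star schema. The sketch below uses an in-memory SQLite database as a stand-in for a warehouse. The table names (`fact_sales`, `dim_product`) and the rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension table: descriptive attributes, one row per product.
    CREATE TABLE dim_product (
        product_key INTEGER PRIMARY KEY,
        name TEXT,
        category TEXT
    );
    -- Fact table: numeric measures plus foreign keys to dimensions.
    CREATE TABLE fact_sales (
        sale_id INTEGER PRIMARY KEY,
        product_key INTEGER REFERENCES dim_product(product_key),
        quantity INTEGER,
        revenue REAL
    );
    INSERT INTO dim_product VALUES
        (1, 'laptop', 'hardware'), (2, 'mouse', 'hardware'), (3, 'ide', 'software');
    INSERT INTO fact_sales VALUES
        (1, 1, 2, 2000.0), (2, 2, 5, 100.0), (3, 3, 10, 500.0), (4, 1, 1, 1000.0);
""")

# Typical analytical query: aggregate facts, grouped by a dimension attribute.
revenue_by_category = conn.execute("""
    SELECT d.category, SUM(f.revenue) AS total_revenue
    FROM fact_sales AS f
    JOIN dim_product AS d USING (product_key)
    GROUP BY d.category
    ORDER BY d.category
""").fetchall()
print(revenue_by_category)
```

Keeping measures in the fact table and descriptions in dimensions means most reports reduce to this same join-and-aggregate pattern.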

Data governance and data management are interdependent for maintaining system reliability in dynamic environments.

Dealing with integration issues requires smart solutions. Focus on data governance to avoid data silos and ensure quality. These basics are the foundation for more advanced topics like cloud platforms and real-time processing.

Specialized Skills That Will Define Successful Data Engineers in 2025

Data engineers in 2025 need to know the latest tools for handling big data. The skills below span distributed computing, data lakes, data warehouses, real-time processing, and NoSQL databases.

Distributed Computing and Big Data Processing

Apache Spark is a leading engine for distributed data processing. PySpark exposes it to Python users, and pipelines are typically written against DataFrames and Spark SQL, with RDDs available as the lower-level API. Key parts include:

- Apache Spark for big tasks

- PySpark for Python users

- Spark SQL for structured data

Data Lake Architecture and Implementation

Data lakes hold raw data in its original format, whether structured, semi-structured, or unstructured. The medallion architecture organizes that data into bronze (raw), silver (cleaned), and gold (business-ready) layers. Here’s a comparison of data lakes and data warehouses:

| Feature | Data Lake | Data Warehouse |
|---|---|---|
| Data Type | Raw data | Structured |
| Use Case | Exploratory analysis | Reporting |
| Storage | Cloud storage (S3, ADLS) | Columnar storage |
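The bronze, silver, and gold levels of the medallion architecture can be sketched in plain Python. In a real lake each layer would be a set of files or tables in object storage. Here the layers are in-memory lists, and the sensor readings are invented.

```python
# Bronze: raw records exactly as ingested (hypothetical sample data).
bronze = [
    {"sensor": "a", "temp_c": "21.5"},
    {"sensor": "a", "temp_c": "bad"},
    {"sensor": "b", "temp_c": "19.0"},
    {"sensor": "a", "temp_c": "22.5"},
]

def to_silver(rows):
    """Silver: validated, typed records; rows that fail parsing are dropped."""
    silver = []
    for row in rows:
        try:
            silver.append({"sensor": row["sensor"], "temp_c": float(row["temp_c"])})
        except ValueError:
            continue
    return silver

def to_gold(rows):
    """Gold: business-level aggregate (average temperature per sensor)."""
    totals = {}
    for row in rows:
        total, count = totals.get(row["sensor"], (0.0, 0))
        totals[row["sensor"]] = (total + row["temp_c"], count + 1)
    return {sensor: total / count for sensor, (total, count) in totals.items()}

silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Because bronze is never modified, the silver and gold layers can be rebuilt at any time if the cleaning rules change.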

Data Warehouse Design and Optimization

Boost data warehousing with columnar storage and materialized views. Use partitioning and indexing to speed up data processing. Good practices include:

- Columnar storage optimization

- Materialized views for faster queries
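One way to see the effect of indexing is to compare query plans before and after creating an index. The sketch below uses SQLite as a small stand-in, so it shows a row-store index rather than columnar storage or materialized views. The `sales` table and index name are hypothetical, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("EU" if i % 2 else "US", float(i)) for i in range(1000)],
)

QUERY = "SELECT amount FROM sales WHERE region = 'EU'"

def plan(sql):
    """Return the human-readable detail column of SQLite's query plan."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))

plan_before = plan(QUERY)  # no index yet: the whole table is scanned
conn.execute("CREATE INDEX idx_sales_region ON sales (region)")
plan_after = plan(QUERY)   # the planner can now search the index on region

print("before:", plan_before)
print("after: ", plan_after)
```

Most warehouses offer a comparable EXPLAIN command, which is usually the first thing to check when a query is slow.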

Real-time Data Processing with Kafka and Structured Streaming

Kafka is a distributed event streaming platform that carries real-time data between producers and consumers. Pair it with Spark Structured Streaming to process those streams end to end. The combination is well suited to:

- IoT sensor data

- Financial transaction analytics
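A common operation on streams like these is a windowed aggregation. The following is a pure-Python sketch of a tumbling (fixed, non-overlapping) window. It is not Kafka or Spark code: a streaming engine would read events from a topic and manage window state and late data for you, while here the events are an invented in-memory list.

```python
from collections import defaultdict

def tumbling_window_sums(events, window_seconds):
    """Sum event values per (window_start, key) for fixed, non-overlapping windows."""
    sums = defaultdict(float)
    for timestamp, key, value in events:
        # Align each timestamp to the start of the window it falls in.
        window_start = timestamp - (timestamp % window_seconds)
        sums[(window_start, key)] += value
    return dict(sums)

# Hypothetical (epoch_seconds, sensor_id, reading) events.
events = [
    (0, "s1", 1.0), (30, "s1", 2.0), (59, "s2", 5.0),
    (60, "s1", 4.0), (119, "s2", 1.5),
]

windows = tumbling_window_sums(events, window_seconds=60)
print(windows)
```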

NoSQL Databases and When to Use Them

NoSQL databases like MongoDB and Cassandra handle non-relational data. Pick one based on your needs:

| Type | Database | Use Case |
|---|---|---|
| Document | MongoDB | Flexible schemas |
| Key-Value | Redis | Fast access |
| Column-Family | Cassandra | High-volume writes and time-series data |
| Graph | Neo4j | Relationship networks |

AWS Platform Essentials for Modern Data Engineers

Learning AWS tools is vital for data engineers. They help build scalable cloud solutions. These services are key for efficient storage, processing, and analysis at scale.

“AWS provides the foundation for data engineers to innovate without compromising on performance or scalability.”

Amazon EMR for Large-Scale Data Processing

Amazon EMR speeds up big data processing with Hadoop, Spark, and Flink. Use auto-scaling and spot instances to manage costs and efficiency in batch jobs.

Data Warehousing with Amazon Redshift

Amazon Redshift is built for analytics, combining columnar storage with performance at large scale. Use sort keys, distribution styles, and concurrency scaling to improve query efficiency.

Serverless Analytics with AWS Lambda and Athena

Use Lambda for event-driven compute and Athena for serverless SQL queries. This combo analyzes S3 data without managing infrastructure. It’s perfect for ad-hoc analytics and real-time triggers.
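As a rough sketch of the event-driven side, a Python Lambda function is a handler that receives an event and a context object. The example below reads bucket and key names from the `Records` list of an S3 event notification. The bucket name, key, and the trimmed-down sample event are made up, and a real handler would go on to process the object or start a query.

```python
from urllib.parse import unquote_plus

def handler(event, context):
    """Collect the S3 objects named in an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append(f"s3://{bucket}/{key}")
    return {"processed": objects}

# Local invocation with a hand-written, trimmed-down event (hypothetical names).
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "raw/2025/sales+report.csv"}}}
    ]
}
result = handler(sample_event, None)
print(result)
```

Calling the handler locally with a sample event like this is a cheap way to test the logic before deploying.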

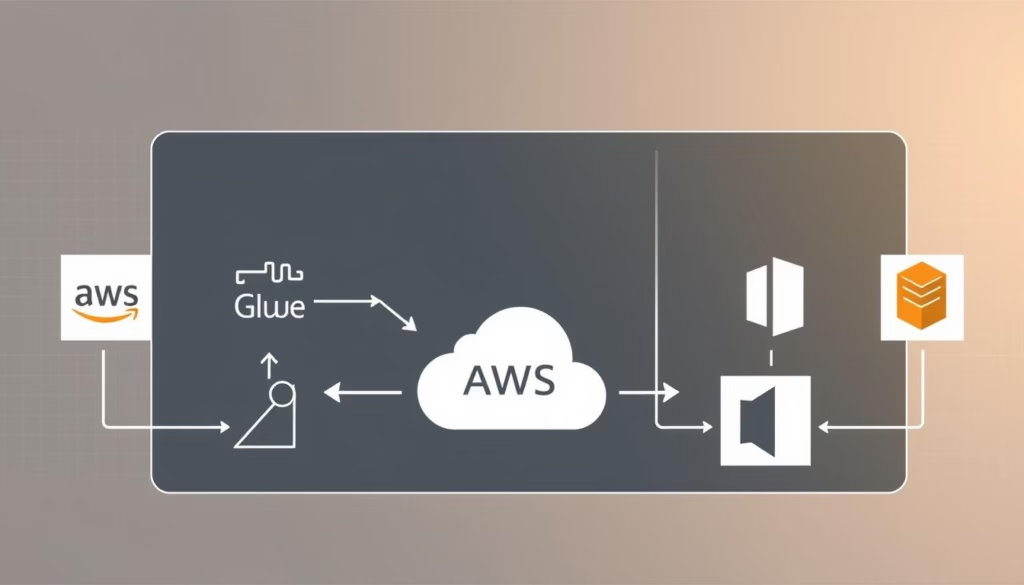

AWS Glue for ETL Processes

AWS Glue automates ETL workflows. It provides managed crawlers, a job scheduler, and a built-in data catalog. It integrates with Redshift and S3 and supports open table formats such as Delta Lake.

S3 and EC2: The Foundation of AWS Data Infrastructure

Amazon S3 offers object storage designed for 99.999999999% (11 nines) durability, with several storage classes that support lifecycle management. EC2 instances handle compute-heavy tasks like ETL jobs and data transformations.

| Service | Primary Use | Key Features |
|---|---|---|
| EMR | Batch processing | Hadoop/Flink support, auto-scaling |
| Redshift | Data warehousing | Columnar storage, concurrency scaling |
| Athena | Serverless analytics | Direct S3 querying, pay-per-query pricing |
| Glue | ETL automation | Managed workflows, Python/Spark support |
| S3 | Data lake storage | 99.999999999% durability, multiple storage classes |

Used together, these services form complete pipelines that play to the strengths of cloud computing. Focus on how the services integrate to get the best results from AWS.

Microsoft Azure: The Rising Star in Data Engineering

Azure offers strong tools for data engineers who need to build scalable solutions. Azure Data Lake Storage and Azure Data Factory are key for modern data pipelines. The Azure Data Engineer Associate certification validates that you can use these tools well. Let’s see how Azure helps teams innovate.

“Azure’s unified approach to data processing reduces complexity and accelerates time-to-insight,” noted industry experts during recent cloud summits.

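- Azure Data Lake Storage Gen2 adds a hierarchical namespace on top of Azure Blob Storage, which makes large analytics datasets easier to organize and secure. It can also serve hybrid and multi-cloud setups.

- Azure Data Factory simplifies complex data movement. It offers serverless data integration and orchestration, and it connects to on-premises systems through a self-hosted integration runtime.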

- Azure Synapse Analytics connects analytics and data warehousing. It supports T-SQL and Spark jobs in one place.

- Azure Databricks helps teams work together. It supports Delta Lake and collaborative notebooks for data engineering.

Choosing the right Azure setup depends on your project needs. Getting certified as an Azure data engineer shows you can use these tools well. With Azure’s tools, teams can make data solutions that grow with the business.

Building Your Portfolio: Essential Data Engineering Projects

Practical projects are key to showing you know data engineering. They prove you can make theory work in real life. Choose projects that meet industry and employer needs.

End-to-End Data Pipeline Project

Build a data pipeline that ingests, transforms, and stores data. Use Apache Airflow for orchestration and Spark for processing. Protect data quality by validating both inputs and outputs.

Examples include putting social media data into a database or handling IoT sensor data.

| Component | Tools |
|---|---|
| Data ingestion | Kafka, REST APIs |
| Transformation | Spark, Flink |
| Storage | Amazon S3, Redshift |
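The shape of such a pipeline, including checks on inputs and outputs, can be sketched in a few plain Python functions. This is only an outline under simplifying assumptions: the stage names, the sample social media records, and the dictionary used as a "warehouse" are hypothetical, and in a real project each stage would be a task in an orchestrator such as Airflow.

```python
def extract():
    """Stand-in for an ingestion step (e.g. reading from an API or a queue)."""
    return [
        {"user": "alice", "likes": "3"},
        {"user": "", "likes": "7"},       # fails the input check: empty user
        {"user": "bob", "likes": "4"},
    ]

def validate_input(rows):
    """Input check: keep rows with a non-empty user and a numeric like count."""
    return [r for r in rows if r["user"] and r["likes"].isdigit()]

def transform(rows):
    """Convert like counts from strings to integers."""
    return [{"user": r["user"], "likes": int(r["likes"])} for r in rows]

def validate_output(rows):
    """Output check: fail loudly rather than load bad data."""
    if any(r["likes"] < 0 for r in rows):
        raise ValueError("negative like count in output")
    return rows

def load(rows, store):
    """Write rows to the target store, keyed by user."""
    for r in rows:
        store[r["user"]] = r["likes"]
    return store

# Run the stages in order; an orchestrator would schedule each as a task.
warehouse = load(validate_output(transform(validate_input(extract()))), {})
print(warehouse)
```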

Real-time Analytics Dashboard

Show your data visualization skills with dashboards on Grafana or Tableau. Use live data from stock tickers or online shops. Also, show how you keep data safe during processing.

Data Integration and Migration Project

Build a data integration system that migrates legacy databases to a cloud platform. Use AWS Glue or Talend for this. Document your data quality checks and migration plan to show how you ensure a smooth cutover.
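One simple data quality check for a migration is to compare row counts and checksums between source and target. The sketch below does this with two in-memory SQLite databases standing in for the old and new systems. The `customers` table, its `id` ordering column, and the helper names are assumptions made for illustration.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table):
    """Return (row_count, checksum) for a table, reading rows ordered by `id`."""
    digest = hashlib.sha256()
    count = 0
    # `table` is trusted here; do not interpolate untrusted input into SQL.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY id"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()

def make_db(rows):
    """Create an in-memory database with a small customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    return conn

rows = [(1, "a@example.com"), (2, "b@example.com")]
source = make_db(rows)
target_ok = make_db(rows)
target_bad = make_db([(1, "a@example.com"), (2, "B@example.com")])  # altered value

source_fp = table_fingerprint(source, "customers")
print("faithful copy matches:", source_fp == table_fingerprint(target_ok, "customers"))
print("altered copy matches: ", source_fp == table_fingerprint(target_bad, "customers"))
```

Matching counts alone would miss the altered email in the second target, which is why the checksum is compared as well.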

Each project should have documentation, code, and demo videos. Publish your data pipelines on GitHub so hiring managers can review them. Clear, easy-to-follow work stands out.

Advanced Career Paths: From Data Engineer to Data Architect

As you move up in your career, becoming a data architect is a big step. This part talks about how to use your data engineering skills to get into more important jobs in system design and strategic planning.

Specialization Options for Senior Engineers

Senior engineers have three main choices:

- Data Architect: They focus on system design for big data systems, including data mesh architectures and the management of large datasets.

- Data Platform Lead: They oversee teams and work on making data pipelines run smoothly.

- DataOps Specialist: They help teams work better together, including engineering, analytics, and DevOps.

Skills for Top-Tier Companies

Top companies look for people with:

- Good skills in performance tuning for big systems

- Ability to solve problems with algorithms and prepare for system design interviews

“Candidates with strong system design portfolios get better data engineer salary at FAANG companies.” – Tech Industry Report 2024

Mastery of Advanced Concepts

Being an expert in performance tuning means:

- Finding bottlenecks in very large datasets

- Improving ETL pipelines with cloud tools

System design interviews check if you can scale microservices and data mesh systems. Staying up-to-date in these areas helps you become a leader.

Conclusion: Your Next Steps on the Data Engineering Journey

As you wrap up your 2025 plan, make CI/CD pipelines a top priority so that your data applications are ready for production. Use Apache Airflow to orchestrate workflows, and Prometheus and Grafana to monitor them. Together they give you near real-time visibility into pipeline health and help keep your systems stable.

First, check your skills against the guide’s core competencies. Look for gaps in areas like distributed systems, data warehouse design, or multi-cloud knowledge. This helps you focus your learning.

Think about how multi-cloud knowledge can boost your career. Many organizations run workloads across AWS, Azure, and on-premises systems, so being able to work in hybrid environments is a key skill. Also, learn the basics of data science to improve your problem-solving skills.

Start with small projects, like using Airflow for workflow automation or Redshift for analytics. Then, move to more complex pipelines. Use CI/CD tools to test and deploy each change automatically as your projects grow.

The need for data systems ready for production is high. Focus on both technical skills and operational details. Stay up-to-date with data governance and cloud tools to keep your skills sharp.

FAQ

What is the role of a data engineer?

A data engineer designs and builds systems for handling big data. They make sure data is ready for others to analyze. This helps in making important decisions.

What skills are essential for becoming a successful data engineer in 2025?

To be a top data engineer, you need to know programming languages like Python and SQL. You should also understand data modeling and cloud computing. Knowing about big data and data pipelines is key too.

How do data engineers differ from data scientists?

Data engineers focus on the data infrastructure. They build and improve systems for data. Data scientists, on the other hand, analyze data to find insights. Both roles are important but different.

What technologies should I learn to become an Azure data engineer?

To be an Azure data engineer, learn about Azure Data Lake Storage and Azure Databricks. Also, get to know Azure Data Factory and Azure Synapse. Knowing how these work together is crucial.

What is a data pipeline and why is it important?

A data pipeline moves data from one place to another. It makes sure data is always ready for analysis. This helps in making quick, informed decisions.

What is the importance of data quality in data engineering?

Data quality is very important. It affects the accuracy of insights. Good data quality helps in making better decisions. Data engineers work to ensure data is reliable.

How can I improve my skills in data analytics?

To get better at data analytics, practice with tools like SQL and Python. Try working on projects that involve analyzing data. This will help you grow your skills.

What career pathways exist for data engineers beyond entry-level positions?

Data engineers can move up to roles like data architects or machine learning engineers. They can also become leaders or focus on improving systems. These paths are available in top companies.

Why is learning cloud computing essential for data engineers?

Cloud computing is key because companies are moving to cloud platforms. Knowing these platforms helps engineers create efficient solutions. It makes data processing and storage better.

What types of projects should I include in my data engineering portfolio?

Your portfolio should have projects like data pipelines and analytics dashboards. Include data integration and migration projects too. Show the details of your work to impress employers.

What emerging trends should data engineers be aware of?

Data engineers should keep up with trends like data governance and AI in data engineering. Staying current ensures you stay relevant and adaptable in the field.