Generative AI is transforming industries, and AWS is targeting these workloads with its newly launched Amazon EC2 Trn2 instances and Trn2 UltraServers. Both are purpose-built for the demands of generative AI training and inference, with an emphasis on performance and price performance.

Why Choose Amazon EC2 Trn2 Instances and UltraServers?

Unmatched Performance for Generative AI

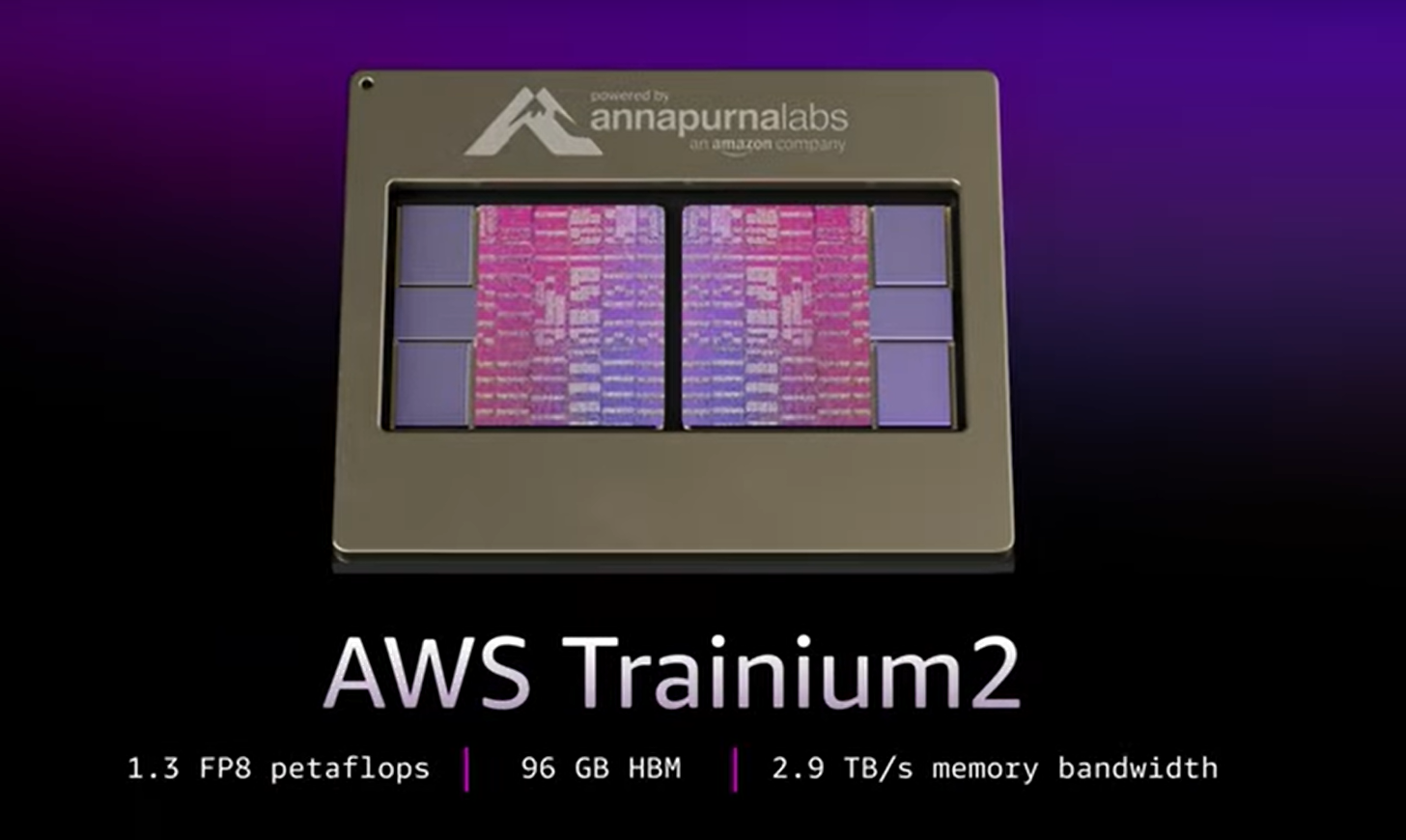

Amazon EC2 Trn2 instances, powered by 16 AWS Trainium2 chips, are the most powerful EC2 instances for training and deploying massive models with hundreds of billions or even trillions of parameters. These instances deliver 30-40% better price performance compared to the current GPU-based EC2 P5e and P5en instances, helping you reduce training times, iterate faster, and deploy AI models efficiently.
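To make the price-performance figure concrete, here is a minimal Python sketch. It assumes that "X% better price performance" means X% more work per dollar, which is a common reading but not something the announcement defines. The function name `relative_cost` is hypothetical and used only for illustration.

```python
def relative_cost(price_perf_gain: float) -> float:
    """Cost of a fixed workload relative to the baseline.

    Assumption: price performance means work per dollar, so a gain of g
    (0.30 for 30%) scales the cost of the same work by 1 / (1 + g).
    """
    if price_perf_gain <= -1.0:
        raise ValueError("gain must be greater than -100%")
    return 1.0 / (1.0 + price_perf_gain)


# The 30% and 40% bounds come from the article; the rest is arithmetic.
for gain in (0.30, 0.40):
    cost = relative_cost(gain)
    print(f"{gain:.0%} better price performance -> "
          f"{cost:.3f}x baseline cost ({1 - cost:.1%} lower)")
```

Under this reading, 30-40% better price performance corresponds to roughly 23-29% lower cost for the same amount of work, which is smaller than the headline percentage.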

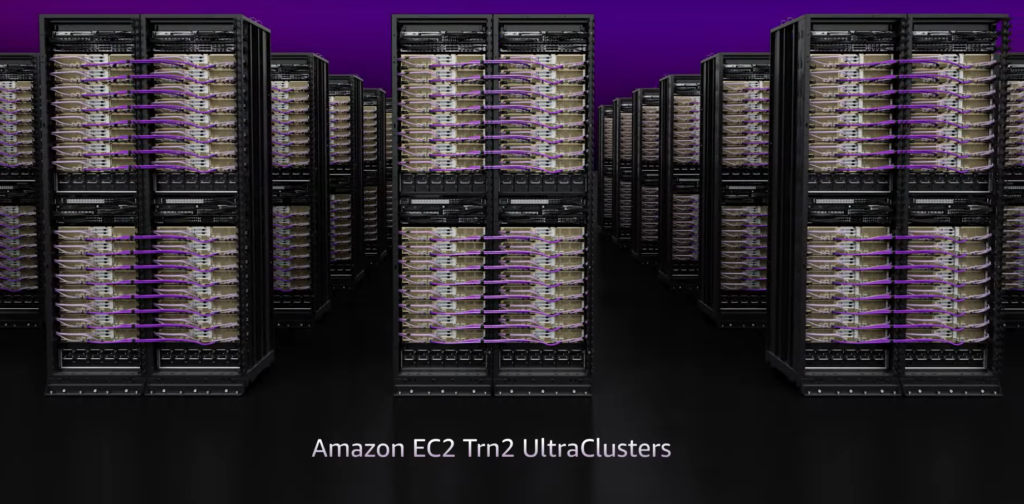

UltraServers for Unprecedented Scale

For workloads requiring more compute and memory than a single instance can deliver, Trn2 UltraServers combine 64 Trainium2 chips across four Trn2 instances using AWS’s proprietary NeuronLink technology. Compared with a single Trn2 instance, this configuration quadruples compute, memory, and networking bandwidth for training and inference of ultra-large models.

Key Benefits

- State-of-the-Art Training and Inference

  - Up to 20.8 FP8 petaflops of compute per Trn2 instance.

  - Up to 83.2 FP8 petaflops in UltraServers.

  - Support for large language models, multimodal models, and diffusion transformers.

- Cost Efficiency

  - Trn2 instances offer 30-40% better price performance than the GPU-based EC2 P5e and P5en instances.

- Scalability and Security

  - With up to 12.8 Tbps of EFAv3 networking in UltraServers, these instances support scale-out distributed training of the largest AI models.

  - Built on the AWS Nitro System, ensuring secure, reliable scaling across thousands of Trainium chips.

- Energy Efficiency

  - Trn2 instances are 3x more energy efficient than Trn1, aligning with sustainability goals.
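The compute figures above are consistent with the 4x scaling from a single Trn2 instance to an UltraServer. The following Python sketch checks that arithmetic using only numbers quoted in this article. The per-chip value is derived here by division and is not a figure stated in the text, and the constant names are illustrative.

```python
import math

CHIPS_PER_INSTANCE = 16          # Trainium2 chips per Trn2 instance
INSTANCES_PER_ULTRASERVER = 4    # Trn2 instances joined via NeuronLink
INSTANCE_FP8_PFLOPS = 20.8       # quoted peak per Trn2 instance
ULTRASERVER_FP8_PFLOPS = 83.2    # quoted peak per UltraServer

chips_per_ultraserver = CHIPS_PER_INSTANCE * INSTANCES_PER_ULTRASERVER
derived_ultraserver_pflops = INSTANCE_FP8_PFLOPS * INSTANCES_PER_ULTRASERVER
# Derived, not quoted: peak FP8 petaflops per chip.
derived_per_chip_pflops = INSTANCE_FP8_PFLOPS / CHIPS_PER_INSTANCE

# The quoted UltraServer figure should match four times the instance figure.
assert math.isclose(derived_ultraserver_pflops, ULTRASERVER_FP8_PFLOPS)

print(chips_per_ultraserver)                  # 64
print(round(derived_ultraserver_pflops, 1))   # 83.2
print(round(derived_per_chip_pflops, 2))      # 1.3
```

These are peak figures, so the sketch says nothing about the throughput a real training or inference job would sustain.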

Advanced Features

- Massive Compute and Memory: Up to 6 TB of shared accelerator memory with 185 TBps of total memory bandwidth in a Trn2 UltraServer.

- High-Speed Networking: EFAv3 networking bandwidth enables ultra-fast data transfer for distributed AI workloads.

- Optimized for AI Frameworks: Native support for PyTorch, JAX, and libraries like Hugging Face ensures seamless integration.

- Neuron SDK: Includes optimizations for distributed training, inference, and debugging, accelerating time to market.

With native support for popular machine learning frameworks and tools, Amazon EC2 Trn2 instances and UltraServers make it easy to build and deploy state-of-the-art AI applications. The AWS Neuron SDK, along with integration with services like Amazon SageMaker and third-party platforms like Ray, Domino Data Lab, and Datadog, simplifies the development pipeline.

Conclusion

Amazon EC2 Trn2 instances and UltraServers represent the next step in AI compute evolution, offering unparalleled performance, scalability, and cost efficiency for generative AI applications. Whether you’re building large language models or real-time AI-powered experiences, these solutions empower you to innovate faster and smarter.
